Having moved my blog over to MiniBlog, things have been going well. The site seems fast and is certainly simple, which ticks the two main boxes that prompted the migration from WordPress. Now that the site has been established for a few weeks, I wanted to see how MiniBlog copes under load (not that it needs to, as I currently get very few visits per month).

I will be using loader.io to perform the tests. I have no affiliation with loader.io and simply chose it because it was a service I had heard of.

My blog is running in Azure on a Shared hosting plan.

Testing

After signing up for a free account, I started testing. I don’t have much experience with load testing, so I was relying on loader.io to guide me through the process. It is very simple to create a new test and get started, and they even give you some sensible defaults. So I just jumped right in.

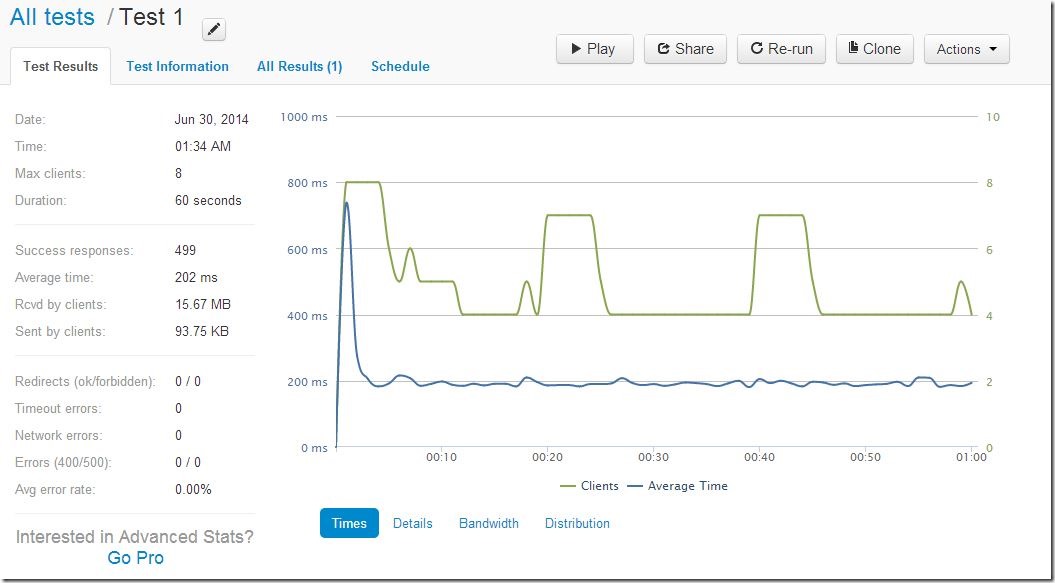
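
Under the hood, a load test like this just starts simulated clients on a schedule and records what comes back. The Python sketch below illustrates loader.io’s two test types as I understand them: “Clients per test” spreads a fixed number of clients across the duration, while “Clients per second” starts that many new clients every second. It is an assumption-laden illustration, not how loader.io actually works internally. `run_load_test`, `run_client` and the `fetch` callable are hypothetical names, and `fetch` stands in for a real HTTP client, which is left out to keep the example self-contained.

```python
import asyncio
from collections import Counter
from typing import Awaitable, Callable

# Hypothetical: any async function that requests a URL and returns the HTTP status code.
Fetch = Callable[[str], Awaitable[int]]


async def run_client(fetch: Fetch, urls: list[str], statuses: Counter) -> None:
    """One simulated client: request each URL in turn and record each status code."""
    for url in urls:
        statuses[await fetch(url)] += 1


async def run_load_test(fetch: Fetch, urls: list[str], mode: str,
                        clients: int, duration: float) -> Counter:
    """Start simulated clients on a schedule and tally every status code returned.

    mode="per_test":   `clients` clients in total, started evenly across `duration` seconds.
    mode="per_second": `clients` new clients started at the beginning of every second.
    """
    statuses: Counter = Counter()
    tasks = []
    if mode == "per_test":
        interval = duration / clients  # e.g. 60 s / 250 clients = one new client every 0.24 s
        for _ in range(clients):
            tasks.append(asyncio.create_task(run_client(fetch, urls, statuses)))
            await asyncio.sleep(interval)
    elif mode == "per_second":
        for _ in range(int(duration)):
            # Start this second's clients in one burst, then wait for the next second.
            for _ in range(clients):
                tasks.append(asyncio.create_task(run_client(fetch, urls, statuses)))
            await asyncio.sleep(1)
    else:
        raise ValueError(f"unknown mode: {mode!r}")
    # Wait for clients that are still in flight when the schedule ends.
    await asyncio.gather(*tasks)
    return statuses
```

Passing a `fetch` that always returns 200, with `clients=10`, two URLs and `mode="per_test"`, yields `Counter({200: 20})`: ten clients making two requests each. The per-second mode here starts each second’s clients in a single burst; a real tool may well spread them across the second instead.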

Test 1

Test Type: Clients per test\
Number of Clients: 250\
Duration: 60s\
URLs: 2 x GET requests

In English, the test was “How does my server perform when 250 users connect over the course of 1 minute?” This is quite a basic test to get us started. The results from loader.io are below.

The average response time was 202 ms, and there were no timeouts or errors. There is an initial spike at the start, but it soon settles down to a consistent response time of just under 200 ms.

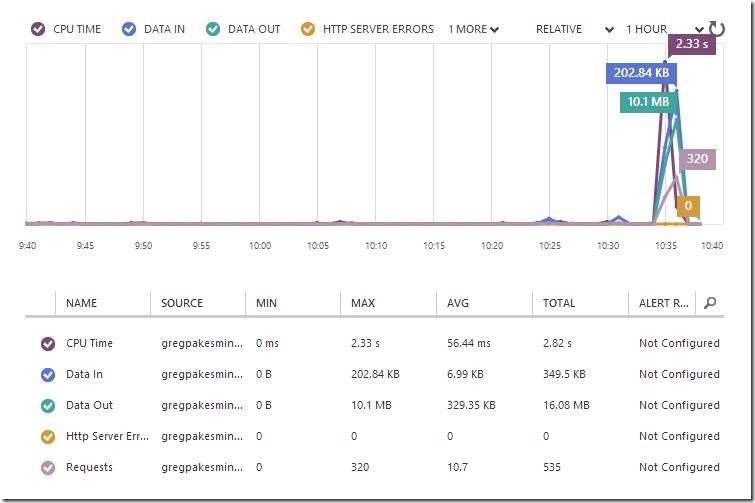

What did the Azure Portal see during this minute?

Azure shows a big peak in usage during the minute of load testing, but it doesn’t look like we are stressing things much, so let’s turn things up a bit.

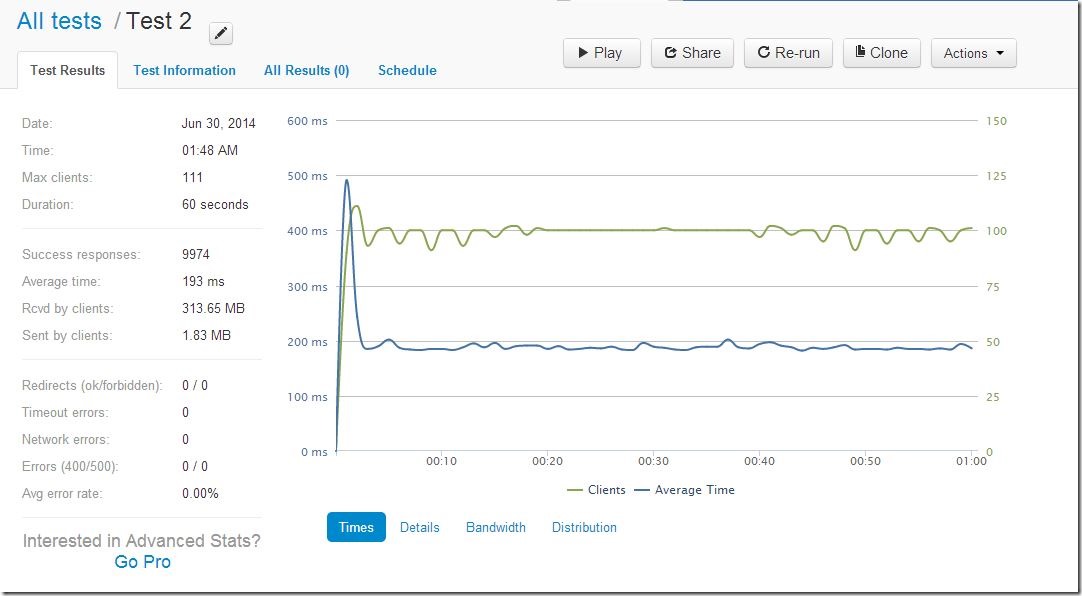

Test 2

Test Type: Clients per test\
Number of Clients: 5000\
Duration: 60s\
URLs: 2 x GET requests

In English, the test was “How does my server perform when 5000 users connect over the course of 1 minute?” The results from loader.io are below.

The story here is much the same as Test 1. We increased the number of concurrently connected clients from 4-6 to 90-100, and there was very little difference. Again, so far so good.

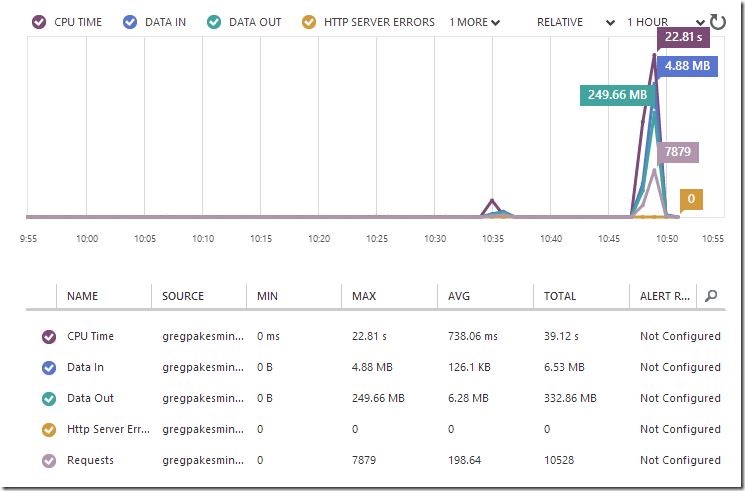

Let’s take a look at the Azure portal.

The previous peak from Test 1 at 10:35 is now eclipsed by the new peak from Test 2. This was clearly a big increase, but I don’t think we are stressing MiniBlog or Azure enough yet.

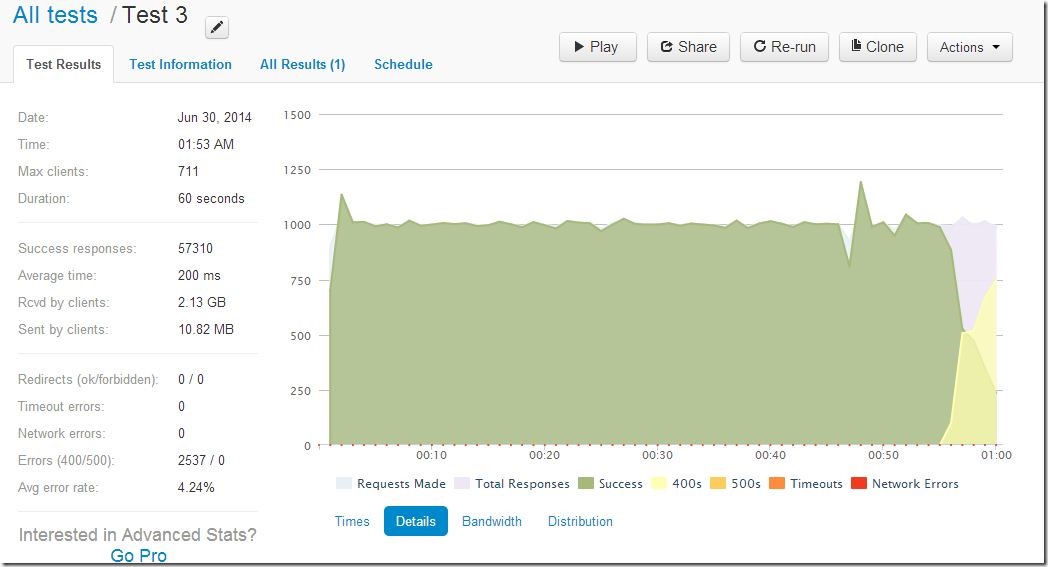

Test 3

Test Type: Clients per second\
Number of Clients: 500\
Duration: 60s\
URLs: 2 x GET requests

In English, the test was “How does my server perform when 500 users connect every second for 1 minute?” This is clearly a more substantial test. The results from loader.io are below.

Once again, this is a significantly bigger load than the previous test: we are connecting 500 users every second for a minute. The total egress data was 2.1 GB, a level of load that my blog is definitely not used to. As you can see, there were 2537 errors in this test, which loader.io reported as 400 HTTP errors. Changing the graph gives a better idea of what happened.

As you can see, towards the end of the test the successes started turning into 400s. Logging was disabled on my Azure website, so I decided it was time to turn it on and enabled Application Logging and Web Server Logging.

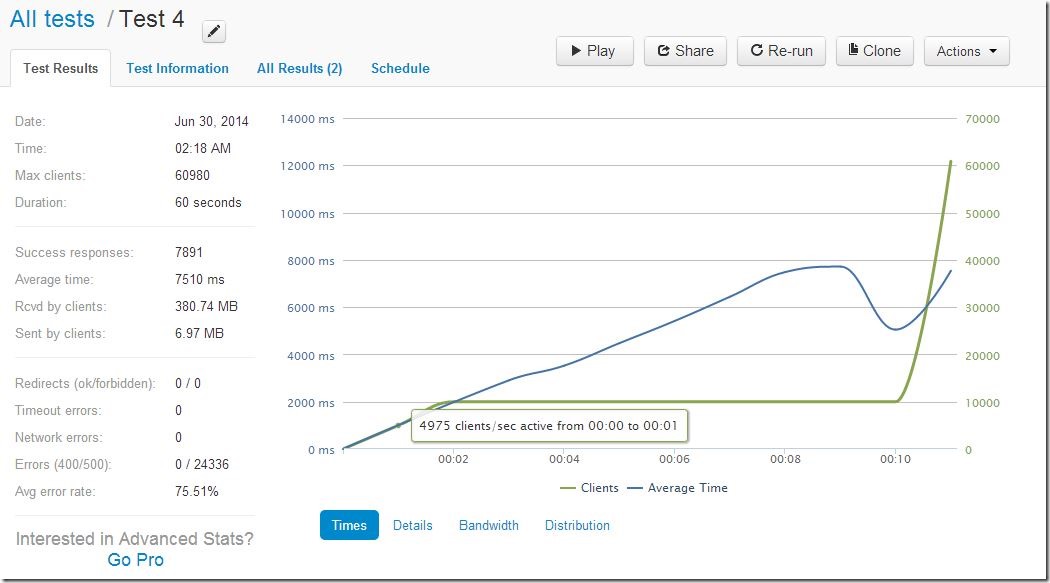

Test 4

Test Type: Clients per second\
Number of Clients: 5000\
Duration: 60s\
URLs: 2 x GET requests

In English, the test was “How does my server perform when 5000 users connect every second for 1 minute?” This is ten times the load of Test 3. The results from loader.io are below.

This test lasted only 10 seconds before stopping due to the number of errors. Looking through the logs, the requests seem to go through fine for a while, but soon start returning 503 Service Unavailable. At this point I looked through the Azure logs I had enabled earlier, but was unable to find anything specific. I presume the 503s are caused by the application crashing under the load, but I have very little insight into the issue beyond the obvious.

It seems that 5000 clients per second is simply too much traffic. Seasoned load testers among you may think this is obvious, but this was an exploratory exercise for me to see what load a shared Azure website could cope with.
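
To put “too much traffic” into numbers, the planned totals for the four tests can be worked out from their settings. The Python sketch below does that arithmetic. The `LoadTest` class and its method names are my own, and the request totals assume every client completes both of its GET requests, so they are upper bounds (Test 4 in particular was stopped after about 10 seconds).

```python
from dataclasses import dataclass


@dataclass
class LoadTest:
    name: str
    mode: str                 # "per_test" or "per_second", matching loader.io's two test types
    clients: int
    duration_s: int
    urls_per_client: int = 2  # every test in this post used 2 x GET requests

    def total_clients(self) -> int:
        # Clients per test: the setting is already the total.
        # Clients per second: the setting is repeated every second of the test.
        return self.clients if self.mode == "per_test" else self.clients * self.duration_s

    def clients_per_second(self) -> float:
        return self.total_clients() / self.duration_s

    def total_requests(self) -> int:
        # Upper bound: assumes every client completes all of its requests.
        return self.total_clients() * self.urls_per_client


tests = [
    LoadTest("Test 1", "per_test", 250, 60),
    LoadTest("Test 2", "per_test", 5000, 60),
    LoadTest("Test 3", "per_second", 500, 60),
    LoadTest("Test 4", "per_second", 5000, 60),
]

for t in tests:
    print(f"{t.name}: {t.total_clients():>7} clients, "
          f"{t.clients_per_second():>7.1f} clients/s, {t.total_requests():>7} requests")
```

Test 3 plans 30,000 clients and Test 4 300,000, compared with 250 and 5,000 for the two clients-per-test runs, so Test 4 asks for 60 times as many clients as Test 2 within the same minute.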

I am hoping to follow this up with another test, increasing the number of instances to see where the true limits lie.

Thoughts on loader.io

This has been my first encounter with loader.io. I have only signed up for a free account and haven’t delved into the depth of what it can do. However, I must say it was very simple to get started: I was able to set up some load tests in seconds and start firing requests at my application.

When the application began returning errors, I found it hard to find out why. loader.io didn’t seem to offer anything beyond HTTP response codes, but maybe that is because I am only using the free version. It also grouped all errors into either 400s or 500s. I’m not sure I agree with that strategy: a 500 error is very different from a 503, and the grouping doesn’t allow for any instant root cause analysis.
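
One way to recover the exact codes that loader.io folds together is to tally them from the Web Server Logging enabled earlier, which Azure writes in the W3C extended log file format (as far as I know; check the `#Fields:` line in your own files). The Python sketch below is a hypothetical helper, `tally_status_codes`, not an Azure or loader.io tool. It reads the column names from the `#Fields:` directive, counts each `sc-status` value, and also rolls the codes up into classes so the two views can be compared.

```python
from collections import Counter
from typing import Iterable


def tally_status_codes(lines: Iterable[str]) -> tuple[Counter, Counter]:
    """Count exact HTTP status codes and their classes (2xx, 4xx, 5xx) in W3C extended log lines."""
    exact: Counter = Counter()
    fields: list[str] = []
    for line in lines:
        line = line.strip()
        if line.startswith("#Fields:"):
            # The #Fields directive names the space-separated columns of the lines that follow.
            fields = line[len("#Fields:"):].split()
            continue
        if not line or line.startswith("#") or "sc-status" not in fields:
            continue  # skip blank lines, other directives, and data seen before a usable #Fields line
        values = line.split()
        if len(values) != len(fields):
            continue  # skip malformed or truncated lines
        exact[values[fields.index("sc-status")]] += 1

    # Collapse exact codes into classes, the way loader.io groups its errors.
    by_class: Counter = Counter()
    for code, count in exact.items():
        by_class[code[0] + "xx"] += count
    return exact, by_class
```

Calling it as `with open("path/to/logfile.log") as f: exact, by_class = tally_status_codes(f)` (the path is a placeholder) would list 500s and 503s as separate entries in `exact`, while `by_class` lumps them together under `5xx`, which is exactly the information the grouped view loses.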

I am hoping to extend my use of loader.io in future projects.